
Future(s) of Power – Algorithmic Power

Today, the very idea of democracy is being challenged.

The increasing influence – and power – of algorithms is one factor currently complicating democracy as we have known it. For the third event in our Future(s) of Power series, in association with Somerset House Studios, we experimented with the method of sortition to populate and hold a Citizens’ Assembly on Algorithmic Power. We had two reasons for doing this.

Firstly, we wanted to highlight that representative democracy – the rigmarole of elections once every five years – isn’t the only way of doing democracy. Alternative democratic methods exist. Sortition is one such method: it uses stratified random sampling to select citizens by lot to populate assemblies or political positions. The Sortition Foundation describes the aim of sortition as an assembly made up of a representative random sample of people who make decisions in an informed, fair and deliberative setting.
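For readers curious about what "stratified random sampling by lot" can look like in practice, here is a minimal Python sketch. It is an illustration only, not the procedure we or the Sortition Foundation used: it stratifies on a single attribute, whereas real sortition usually balances several at once. The function name `stratified_sample`, the `age_band` field and the example pool are all hypothetical.

```python
import random
from collections import Counter, defaultdict


def stratified_sample(pool, stratum_of, seats, seed=None):
    """Select `seats` people by lot, giving each stratum a number of
    seats proportional to its share of the pool (largest-remainder rule)."""
    if seats > len(pool):
        raise ValueError("cannot fill more seats than there are people")
    rng = random.Random(seed)

    # Group the pool into strata (for example, age bands).
    strata = defaultdict(list)
    for person in pool:
        strata[stratum_of(person)].append(person)

    # Proportional allocation in integer arithmetic: quotient and remainder.
    total = len(pool)
    shares = {k: divmod(seats * len(m), total) for k, m in strata.items()}
    alloc = {k: q for k, (q, _) in shares.items()}

    # Hand any unfilled seats to the strata with the largest remainders.
    leftover = seats - sum(alloc.values())
    by_remainder = sorted(shares, key=lambda k: shares[k][1], reverse=True)
    for k in by_remainder[:leftover]:
        alloc[k] += 1

    # Draw by lot, without replacement, within each stratum.
    selected = []
    for k, members in strata.items():
        selected.extend(rng.sample(members, alloc[k]))
    return selected


# Hypothetical pool of 100 volunteers in three age bands.
pool = (
    [{"id": i, "age_band": "under 40"} for i in range(50)]
    + [{"id": i, "age_band": "40-64"} for i in range(50, 80)]
    + [{"id": i, "age_band": "65+"} for i in range(80, 100)]
)
assembly = stratified_sample(pool, lambda p: p["age_band"], seats=10, seed=1)
seat_counts = Counter(p["age_band"] for p in assembly)
print(seat_counts)
```

With this pool, a 10-seat draw always yields 5, 3 and 2 seats for the three bands. Only the individuals chosen within each band are left to chance.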

Secondly, in gathering a diverse group of people together to collectively deliberate the issue of algorithmic power, we wanted to contrast collaborative human decision making with algorithmic decision making through the citizens’ assembly itself.

We chose to discuss algorithmic power because algorithms, with their ability to handle huge datasets, have automated and improved much of everyday life. But machine learning algorithms also contribute to the phenomenon of computational propaganda, where algorithms, automation and politics collide. Misinformation, botnets and fake news are used as political tools to either amplify or repress political content online. Private companies such as Cambridge Analytica are paid vast sums to help shape political campaigns. Accusations fly about Russian intervention via algorithms and botnets in both the UK’s EU referendum and the 2016 U.S. presidential election. Within the UK government, algorithms are already used in policy making. The government of the UAE recently launched its ambitious and somewhat utopian AI strategy to 2031. Algorithmic power is as pervasive as it is invisible.

This wasn’t Superflux’s first venture into initiating a public discourse involving citizens. Back in 2008 we held an open call to recruit eight people with diverse perspectives for our ‘Power of 8’ project. The selected eight were immersed in an in-depth, collective exploration of an alternate future ecosystem, while we looked out for points of conflict and consensus amongst their varied worldviews and perspectives.

Recent citizens’ assemblies around the world have reached beyond simple decision making on a particular issue to also focus on engaging, informing and including the public. For example, the BurgerForum of 400 people in Germany in 2011 was as much about encouraging participating citizens to identify problems as it was about responding to challenges. Another aim of the BurgerForum was for citizens’ voices to be heard concerning political issues. Finally, including citizens in assemblies like these directly introduces those citizens to a different way of doing democracy.

RECRUITMENT AND SELECTION

This was a pilot experiment, constrained by time and budget, and we didn’t expect a perfect practice of sortition. Despite constraints, we were determined to engage as diverse a population as possible for the event in terms of ethnic background, gender identification, physical ability, and education.

In the end, there was fair representation in terms of ethnic background, gender identification and physical ability, but not at all in terms of age, geographical location or education background. Representation was probably better than if we had allocated tickets on a first come, first served basis, but it couldn’t be said to be representative of the general population. Ultimately, 33 citizens joined us for what would be an express 90-minute “citizens’ assembly”.

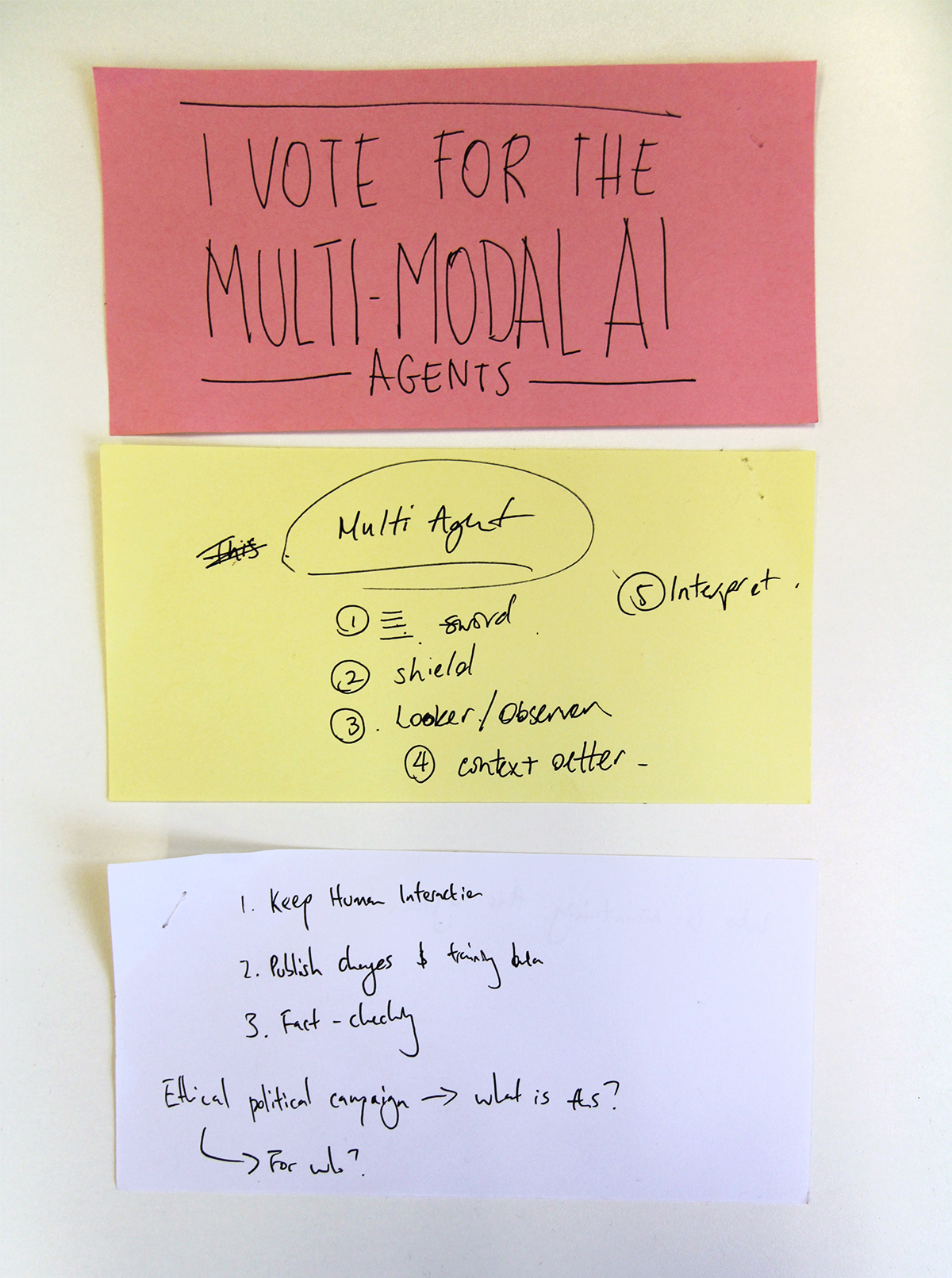

Professor Jun Wang explaining multi-agent AI systems

METHODOLOGY

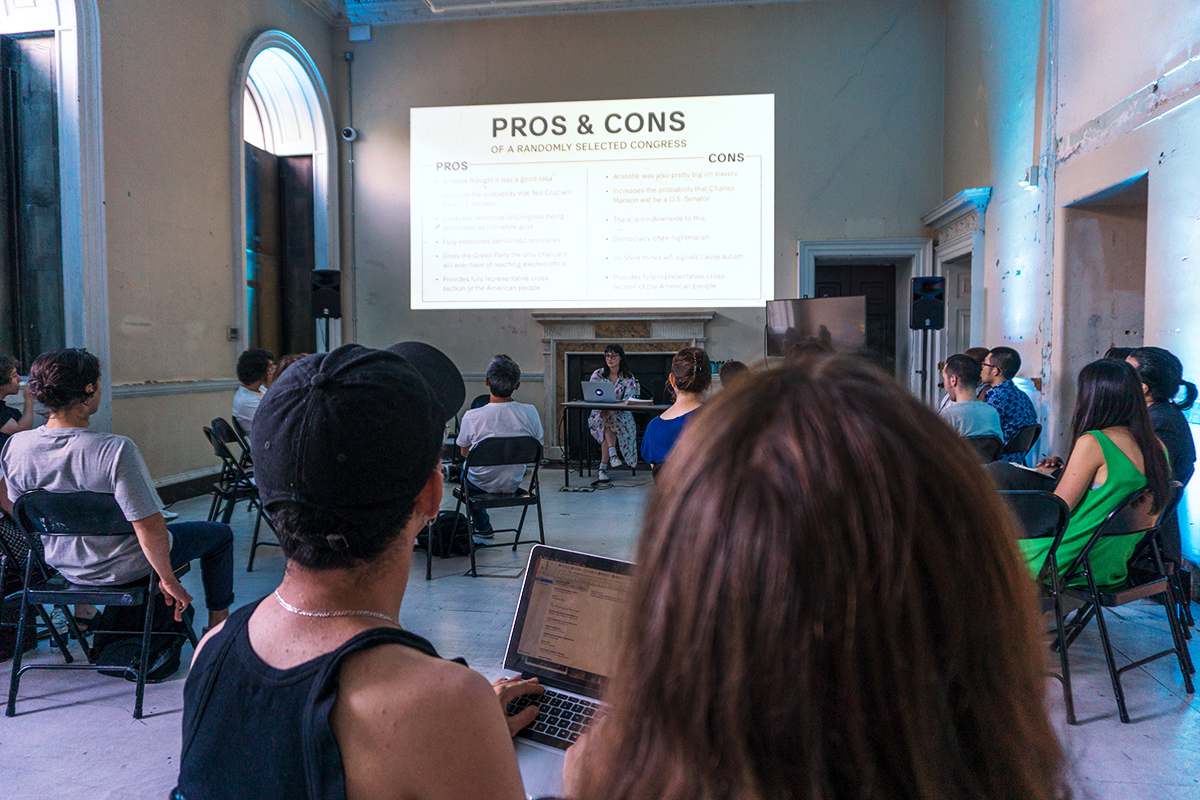

Part 1 – Background information & evidence

We were lucky to be joined by some top experts on alternative democracy, algorithmic transparency, and multi-agent AI and reinforcement learning. In the short time we had, this really helped us meet the “informed, fair and deliberative setting” aspect of a citizens’ assembly which uses sortition. Following a deliberately neutral introduction by Danielle from Superflux to the current conditions involving manifestations of algorithmic power, we heard lightning introductions from Professor Jun Wang, Dr Stephanie Mathieson and Indra Adnan about their work. With this informative aspect of the session complete, we hoped the group had enough background on the topic from various perspectives to consider the next stage of the experiment.

Part 2 – An imagined scenario to explore

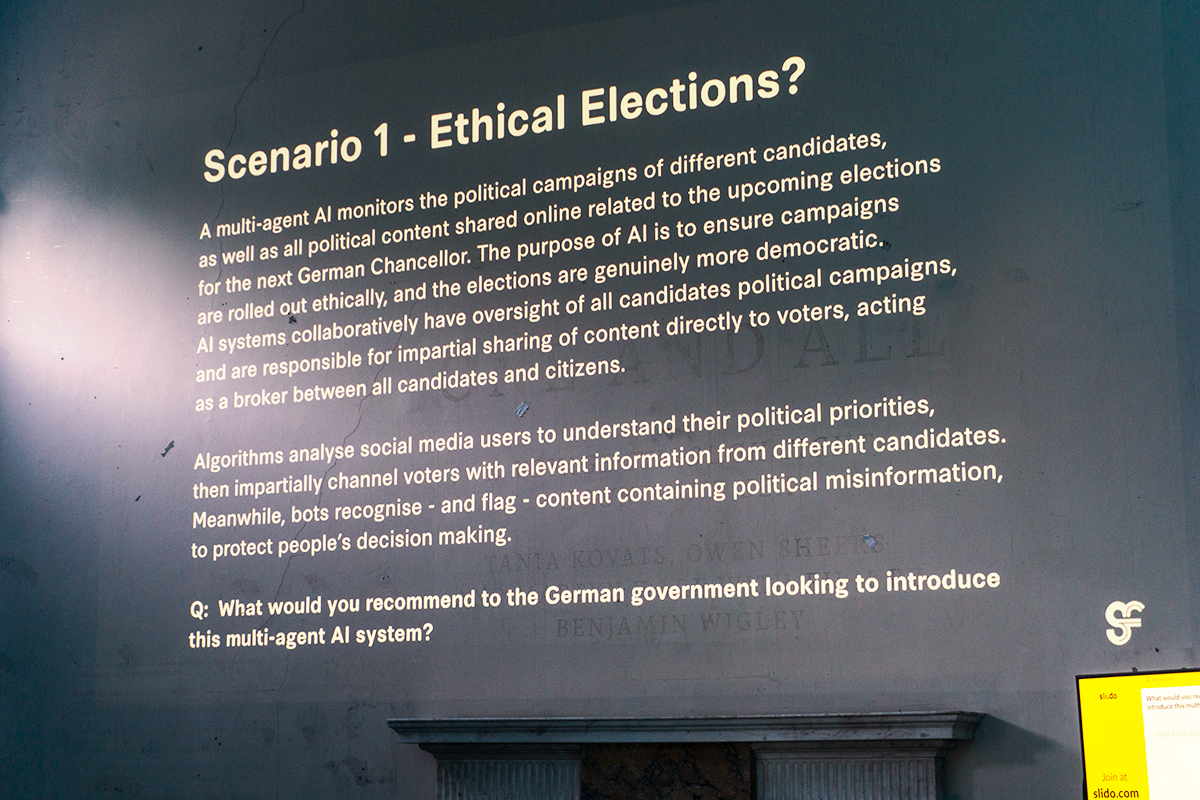

We introduced everyone to an imaginary future involving a type of algorithmic power far beyond what exists today, as a speculative thought experiment.

After talking through the scenario, we asked the group to form smaller groups and to actively mix with people they didn’t know, to collectively consider the question:

What recommendations and considerations do you propose for the German government looking to introduce this multi-agent AI system?

During the discussion, our guests and members of the Superflux team roamed between the groups to answer questions. Participants had mini notebooks to write down their thoughts on the issues and we encouraged posting questions, thoughts and proposals to sli.do, which was projected on a large screen so there was transparency beyond individual groups. To finish, we asked each group to share their thoughts with the wider assembly.

Findings & Insights

All groups quickly identified three distinct, but interrelated issues within the scenario. To acknowledge that, we will consider them separately here, too.

Issue 1 – AI monitoring and sharing content related to political campaigns

Issue 2 – AI analysing and profiling citizens on their interests

Issue 3 – Algorithmic flagging of misinformation

Beyond these three issues, there were several emergent themes.

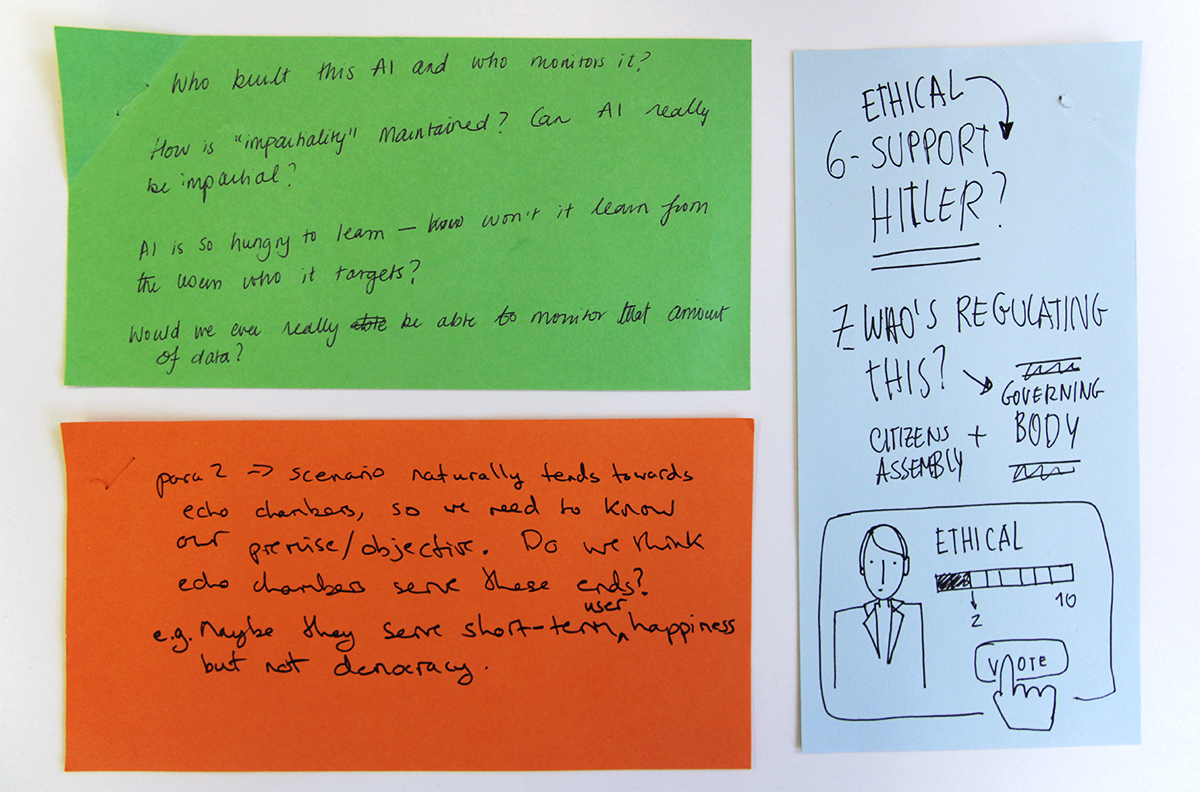

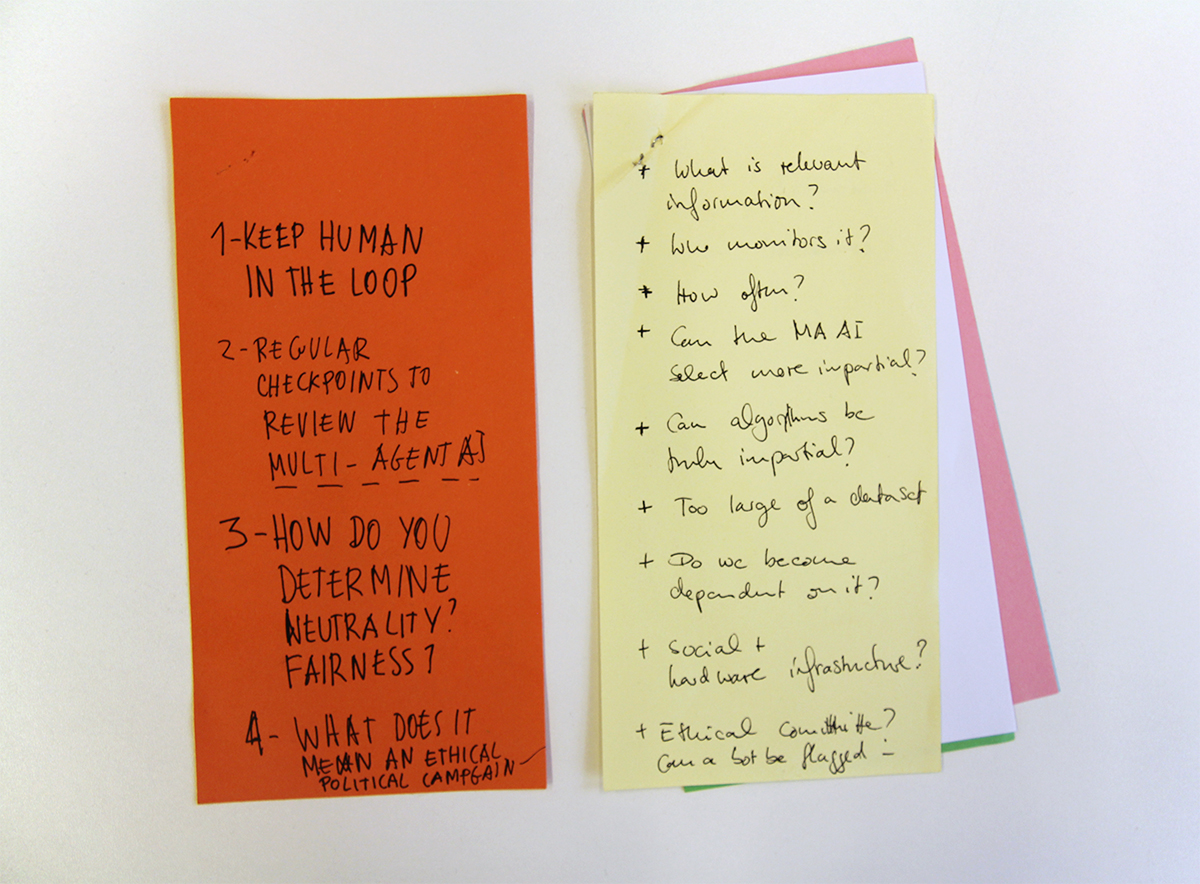

A human – multi-agent AI coalition

This theme spanned all three issues. Suggestions ranged from the simple statement “Keep human interaction” to a suggestion directly related to Issue 3, “Dubious claims be checked by human fact-finders”, as well as the recommendation:

“The multi-agent system needs to be an informant, not a decision maker, because human beings aren’t able to act democratically.”

Another participant raised a concern involving the necessity of human objective setting:

“You can’t programme machines to programme objectives (when) you aren’t”

Several participants were enthusiastic about using citizens’ juries or assemblies as a solution within the scenario. One participant recommended that a multi-agent system should only be allowed if it was accompanied by citizens’ assemblies. Other suggestions included the need for an ethical committee to oversee the multi-agent AI (MAAI) system. Yet another person questioned the practicality of an ethical committee:

“Human ethical committees – how would they keep up?”

Concerns about responsibility kept coming up, too. The question “Who watches the watchers?” implied a need for human regulation in the process, but there was clear scepticism about whether this requirement could be satisfied in practice: the recommendation which immediately followed it (relating to Issue 1) outlined a system for banning search engines from giving personalised results. This participant even described how this might work:

“E.g. If Tommy Robinson and Akala searched ‘what are the likely consequences of Brexit?’ they’d get the same results.”

Moving on to Issue 3, and the flagging of content, the role of human moderators was suggested:

“Flagging content is a bit easier and could be corroborated by moderators.”

Once again, this suggestion of human oversight or involvement kept cropping up.

The Paradox of Impartiality

A major recurrent theme during the session focused on the MAAI’s “impartial sharing of content” outlined in Issue 1.

“Can the MA AI select more impartially?”

“How do you determine neutrality? How do you determine fairness?”

“With this tool, no arguments that aren’t obeying the rules of the political debate would emerge.”

“The idea of impartiality in politics is perhaps a paradox. Politics needs debates and we should be wary of trying to over-neutralise people’s thinking.”

“Can an AI be impartial if it is fed by data posted by people posting their political opinions? People with strong opinions are more likely to post, so it could be fed by biases.”

“Can you have politics with complete impartiality?”

This was an important consideration, and it also raises the question of what happens to voter engagement when the emotional engagement seemingly now inherent in political campaigning is removed. Would people even care about politics anymore if they were only presented with impartial, stone-cold facts? Which brings us to the next theme…

Audiences beyond the reach of Algorithms

From Issue 2 – the Analysing and Profiling of Citizens’ Interests – emerged a consideration of those who weren’t digitally connected and whom the system couldn’t reach.

“But what can it do about the problem of those who are not politically engaged and may not vote? How could we use it to encourage everyone to care?”

Not only that, but the MAAI wouldn’t have any influence beyond the digital realm. For those who aren’t digitally enabled – what happens to them? Are they still at risk?

“What about accessibility? Who is a citizen and the voting public? What about people who are undocumented?”

“What about audiences not on social media, or other platforms that might be more anonymous? What about private accounts?”

A sub-theme within this area was the issue of how people consent to only receiving impartial information.

Mitigating Echo Chambers vs Perpetuating Echo Chambers

Around Issue 2, several groups noted the problem of echo chambers in this scenario. However, the consideration as to whether this was good or bad varied.

“This scenario naturally tends towards echo chambers, so we need to know our premise/objective. Do we think echo chambers serve these ends? E.g maybe they serve short-term happiness but not democracy?”

This critique looks at whether the channelling of preferred information to voters creates an echo chamber and how democratic this is. Conversely, another participant noted:

“This AI could help mitigate the phenomenon of echo chambers for those with strong opinions.”

Of course, the scenario could in some cases lead to the mitigation of echo chambers, and in others perpetuate them.

Flagging / Labelling misinformation

“What’s the point of bots flagging? Misinformation will always exist but is removing it more problematic? How instead, can we mitigate against misinformation in the first place?”

“Misinformation could be flagged using quite a straightforward classifier based on labelled data and user feedback.”

“How does a bot get flagged?”

Expanding the thought experiment scenario

With everyone’s imaginations on a roll, the following proposals emerged:

“What about electing this multi-agent AI as the president, then?”

“What if the AI addressed the problem of low voter turnout by assigning those who don’t vote, a vote based on their background and interests and opinions expressed through their online presence? What if we moved to trusting the AI above human judgment and asked it to choose the candidates themselves – or even the result?”

What we learned

What was most powerful about this event is that the emergent data were mainly further questions. This is significant, as currently, this is what differentiates algorithmic “intelligence” from human intelligence. Algorithms are good at following processes and giving answers. Human beings, on the other hand, are great at asking questions.

Although we were limited to 90 minutes to tackle a gigantic topic, the contributions from all participants emphasised the value of considering a particular use case for an emerging or even possible technology and then taking it even further. The participants spontaneously and critically expanded upon the initial scenario we devised as a provocation, and it was clear how significant their questions and considerations were.

We were really struck by how actively engaged the discussion was, both within the smaller groups and in the whole-group sharing session at the end. Nearly all participants contributed, rather than a single dominant representative speaking for each group.

The value of engaging members of the public on this issue was even greater than we imagined. We’d love to see what might happen if a citizens’ assembly on the issue were carried out beyond a quick, 90-minute introductory simulation.

Further reading

Artificial Intelligence can save Democracy, unless it destroys it first

What happens when a computer runs your life

Interestingly, our friends and collaborators at CCCB posted an article on augmented / automated democracy the very same day we held the Citizens Assembly.